The PAYNEful Portfolio – Creating a New YouTube Subscription Feed (or How to Replace Your YouTube Subscription Feed Now Google Has Ditched It)

Note: if you’re looking for code, there’s a link at the bottom of this article where you can get a copy. This article just explains what the code does.

About a year ago, Google mentioned casually that they would be getting rid of YouTube subscription feeds[1]. Earlier this year they finally made good on their threat and turned the feed off for good. This wasn’t a big problem for the many people who don’t use RSS in their daily lives[2], but for me it was a damn nuisance as I like all my updates in one place.

Google courteously explained that although the main YouTube feed was going away, you can still access individual channel feeds. No problem, right?

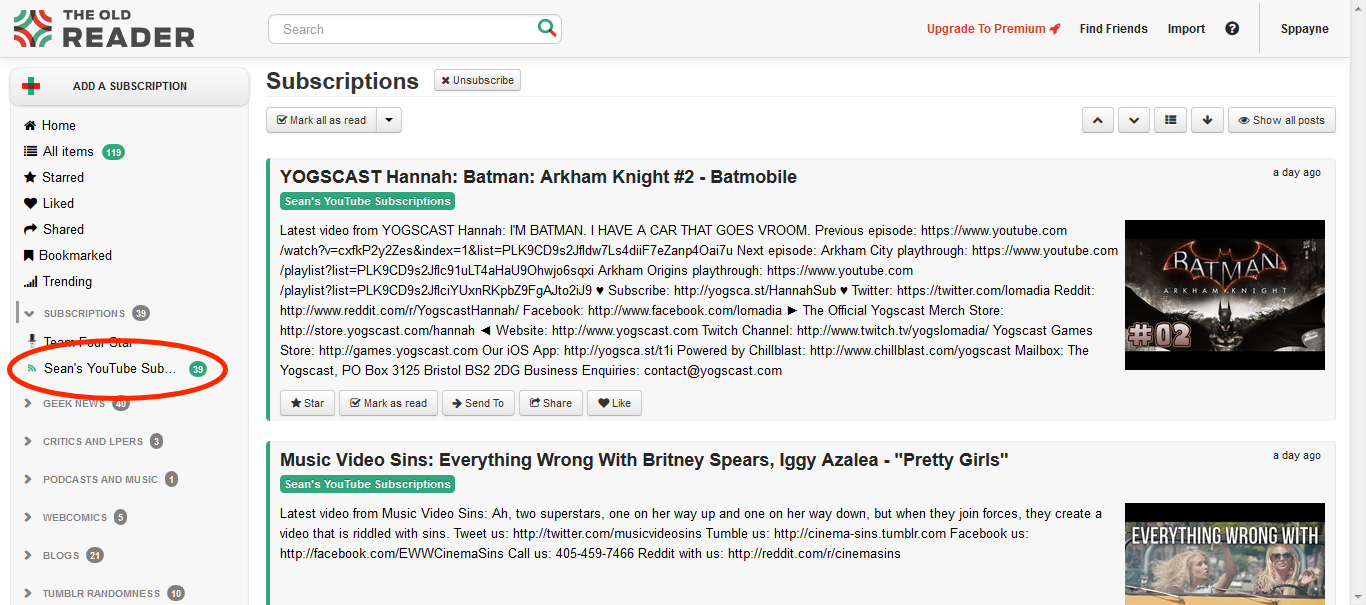

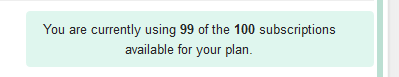

My preferred feed reader, the Old Reader, has a limit of up to 100 subscriptions on the free plan, and I have 435 subscriptions on YouTube alone. Please understand that I don’t have a TV licence as most of my video entertainment comes via YouTube and other online outlets, so it’s like being subscribed to 400+ channels that I actually want rather than paying Sky or Virgin for 900+ channels that I don’t want!

I refuse to pay money for extra subscriptions just because Google want to drop a service, so I decided to just build a subscription feed myself. How hard can it be, right?

Fetching the Feeds

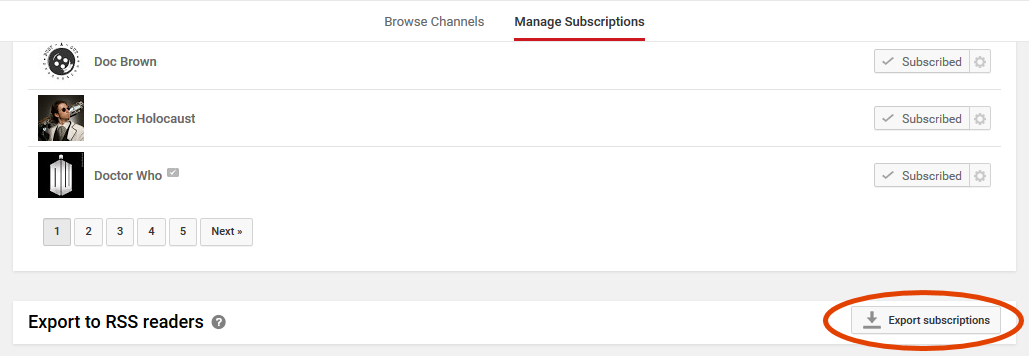

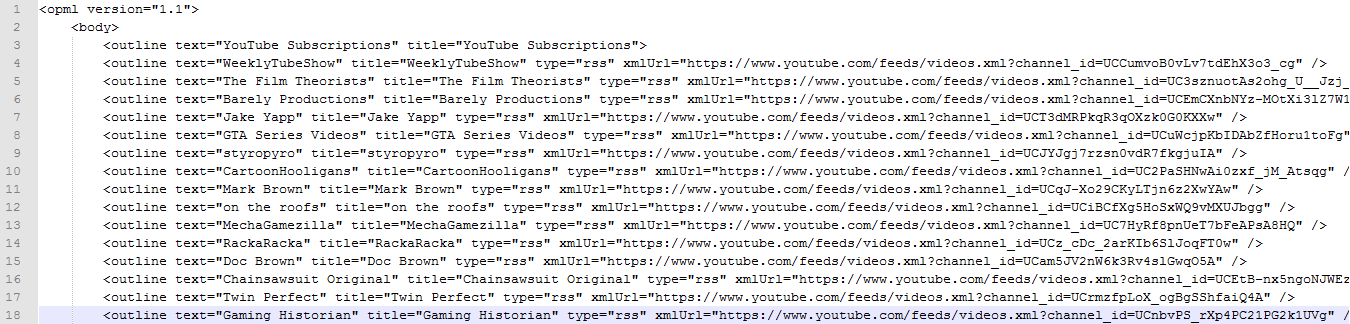

Google did one good thing at least – at the bottom of everyone’s YouTube subscriptions management page is a button allowing you to export all your channel subscriptions to one OPML file. This file would make the basis of my new feed.

The file itself just lists where each of the individual feeds can be found, along with some metadata (titles, channels, etc.).

My plan was to have a URL[3] that the Old Reader can invoke and pull all the new items from. I know that the Old Reader is clever enough to know which links it has presented before, so all I needed to do was make sure that fresh links within the last day or two were showing and the reader would take care of the rest.

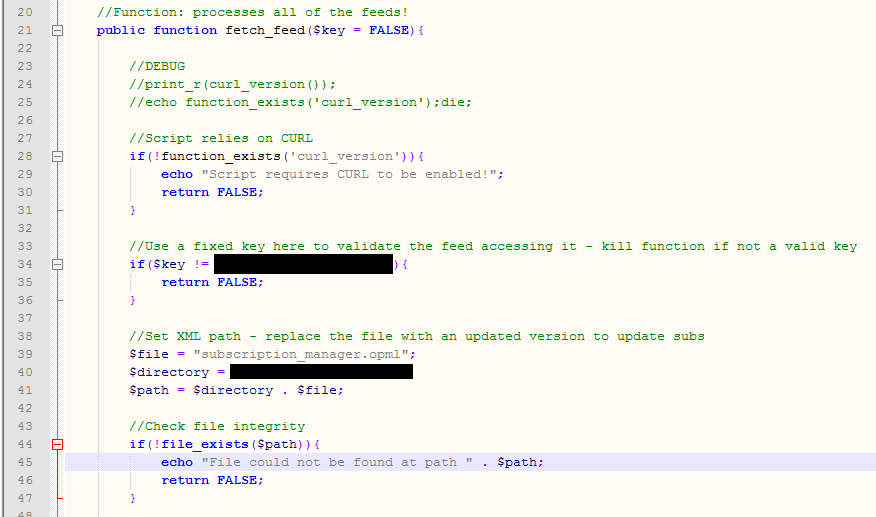

My website is built (mostly) in CodeIgniter, so I created a new controller class devoted to processing all my feeds. I hard-coded a “key” to pass in to prevent people other than my feed reader from accessing my subscriptions, and pointed the script to look at the folder where I would keep my current OPML file. Note that I am hard-coding a lot of things here which isn’t 100% in the spirit of “flexible code”, but it’s going to serve a specific purpose for me rather than being open to the public so it will do!

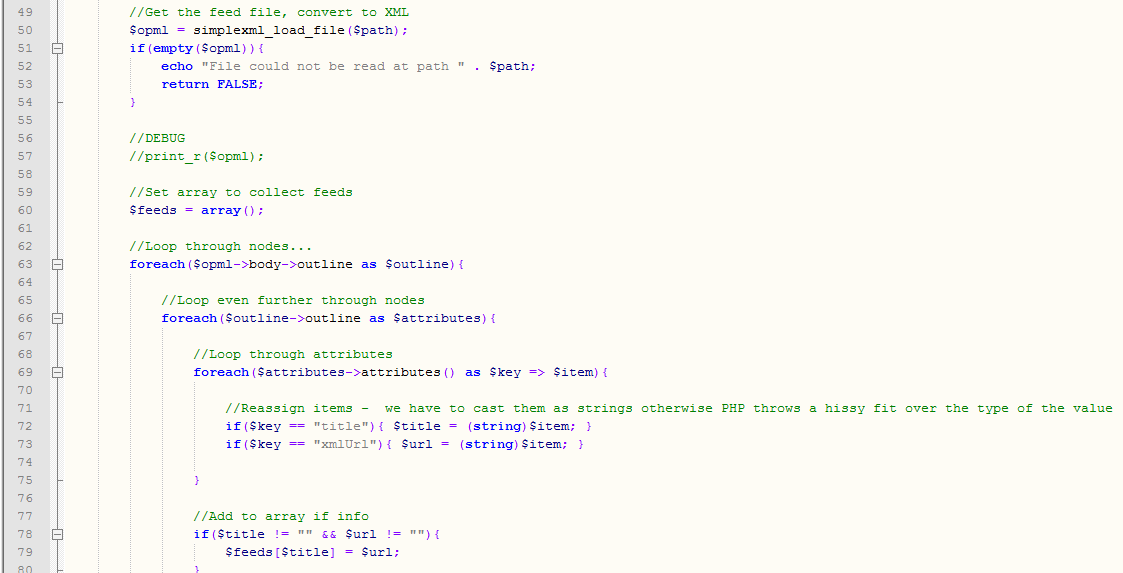

Unlike the horrendous non-standard markup I encountered when trying to process bookmark files, Google’s OPML is well-structured, which means we can use the proper XML parsing tools built into PHP! We load the contents of the file into a SimpleXML object and loop through to collect the two most important items we need per YouTube channel – the title of the channel and the URL of the relevant feed. Note that we have to “cast” each node into a string, as otherwise PHP assigns the SimpleXML object node itself to the variable rather than its text value.
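As a rough illustration of that parsing step, here is a minimal sketch rather than the code from the repository. It assumes each channel in the export is an `<outline>` element carrying `title` and `xmlUrl` attributes; the function name `read_opml_feeds()` and the sample data are made up for the example.

```php
<?php
/**
 * Collect channel titles and feed URLs from an OPML export.
 * Assumption: every channel is an <outline> element with "title" and
 * "xmlUrl" attributes; outlines without an xmlUrl (folders) are ignored.
 */
function read_opml_feeds(string $opml): array
{
    $xml = simplexml_load_string($opml);
    if ($xml === false) {
        return []; // not well-formed XML
    }

    $feeds = [];
    foreach ($xml->xpath('//outline[@xmlUrl]') ?: [] as $outline) {
        $feeds[] = [
            // Without the (string) casts these would be SimpleXMLElement objects.
            'title' => (string) $outline['title'],
            'url'   => (string) $outline['xmlUrl'],
        ];
    }
    return $feeds;
}

// Hypothetical sample standing in for the exported file.
$sample = <<<XML
<opml version="1.1">
  <body>
    <outline text="YouTube Subscriptions" title="YouTube Subscriptions">
      <outline title="Example Channel" type="rss" xmlUrl="https://example.com/feed-a.xml" />
      <outline title="Another Channel" type="rss" xmlUrl="https://example.com/feed-b.xml" />
    </outline>
  </body>
</opml>
XML;

$feeds = read_opml_feeds($sample);
```

With a real export you would pass in the result of `file_get_contents()` on the OPML file instead of the inline sample.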

We now have an array of feed URLs. I pass this into a function called _process_feeds() and inside that function we will now need to do the following:

- Loop through the array

- Request each feed URL and get the contents of the RSS tied to them

- Loop through the first two videos on each feed and check the date – if the most recent videos are older than 2 days we move on to the next feed and do the same

- If the videos have been added within the last two days, get all the juicy details and stick them into a results array

- Sort the array based on timestamp and return it
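The steps above can be sketched roughly as follows. This is an illustration under assumptions, not the repository code: it assumes each feed is RSS 2.0 with `<item>` entries listed newest first, it takes the fetching function as a parameter so the example runs without any web calls, and the names `collect_recent_videos()`, `$vidLimit` and `$maxAge` are invented here.

```php
<?php
/**
 * Collect recent videos from a list of feeds, newest first.
 *
 * $feeds    entries shaped like ['title' => ..., 'url' => ...]
 * $fetch    callable that returns the feed XML for a URL, or null on failure
 * $now      current Unix timestamp, passed in so the cut-off is predictable
 * $vidLimit how many of the newest entries to inspect per feed
 * $maxAge   cut-off in seconds (172800 = two days)
 */
function collect_recent_videos(array $feeds, callable $fetch, int $now, int $vidLimit = 2, int $maxAge = 172800): array
{
    $results = [];
    foreach ($feeds as $feed) {
        $body = $fetch($feed['url']);
        $xml  = is_string($body) ? simplexml_load_string($body) : false;
        if ($xml === false) {
            continue; // unreachable or malformed feed: move on to the next one
        }
        // Assumed RSS 2.0 layout with the newest item first.
        $items = array_slice($xml->xpath('/rss/channel/item') ?: [], 0, $vidLimit);
        foreach ($items as $item) {
            $timestamp = strtotime((string) $item->pubDate);
            if ($timestamp === false || $now - $timestamp > $maxAge) {
                break; // too old, so anything after it is older still
            }
            $results[] = [
                'title'     => $feed['title'] . ': ' . (string) $item->title,
                'link'      => (string) $item->link,
                'timestamp' => $timestamp,
            ];
        }
    }
    // Newest first across all channels.
    usort($results, function (array $a, array $b): int {
        return $b['timestamp'] <=> $a['timestamp'];
    });
    return $results;
}

// Canned feeds stand in for real web calls in this example.
$canned = [
    'feed-a' => '<rss version="2.0"><channel>'
        . '<item><title>A new</title><link>https://example.com/a1</link><pubDate>03 Jul 2015 09:00:00 +0000</pubDate></item>'
        . '<item><title>A old</title><link>https://example.com/a2</link><pubDate>29 Jun 2015 09:00:00 +0000</pubDate></item>'
        . '</channel></rss>',
    'feed-b' => '<rss version="2.0"><channel>'
        . '<item><title>B new</title><link>https://example.com/b1</link><pubDate>02 Jul 2015 18:00:00 +0000</pubDate></item>'
        . '<item><title>B second</title><link>https://example.com/b2</link><pubDate>02 Jul 2015 12:00:00 +0000</pubDate></item>'
        . '<item><title>B third</title><link>https://example.com/b3</link><pubDate>02 Jul 2015 10:00:00 +0000</pubDate></item>'
        . '</channel></rss>',
];
$fetch = function (string $url) use ($canned) {
    return $canned[$url] ?? null;
};
$feeds = [
    ['title' => 'Channel A', 'url' => 'feed-a'],
    ['title' => 'Channel B', 'url' => 'feed-b'],
];
$now    = gmmktime(12, 0, 0, 7, 3, 2015);
$videos = collect_recent_videos($feeds, $fetch, $now);
```

In this sample "B third" is recent enough but is never inspected, because only the first two entries of each feed are checked. That is the trade-off between processing time and completeness.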

If _process_feeds() returns an array with some items in it, we have some feed material! The best part was that I already had an RSS template built from when I created an RSS feed that amalgamates some WordPress feeds and a Twitter feed into one RSS. I simply load this existing view and pass in the collected feed data and hey, presto! One YouTube subscriptions feed.

The Data Collection Timeout Problem

It looked good to go, so I uploaded all the files to my web server and plugged the URL into the Old Reader…

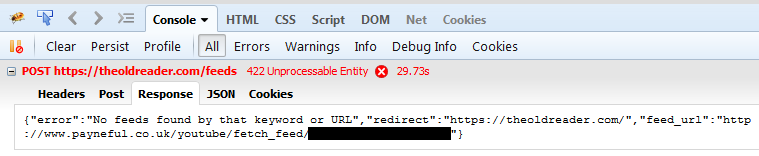

The Old Reader refused to register the URL as valid RSS. After a bit of tinkering, I managed to figure out why: Firebug revealed that the Old Reader would query an RSS feed for 30 seconds before timing out and presenting an error.

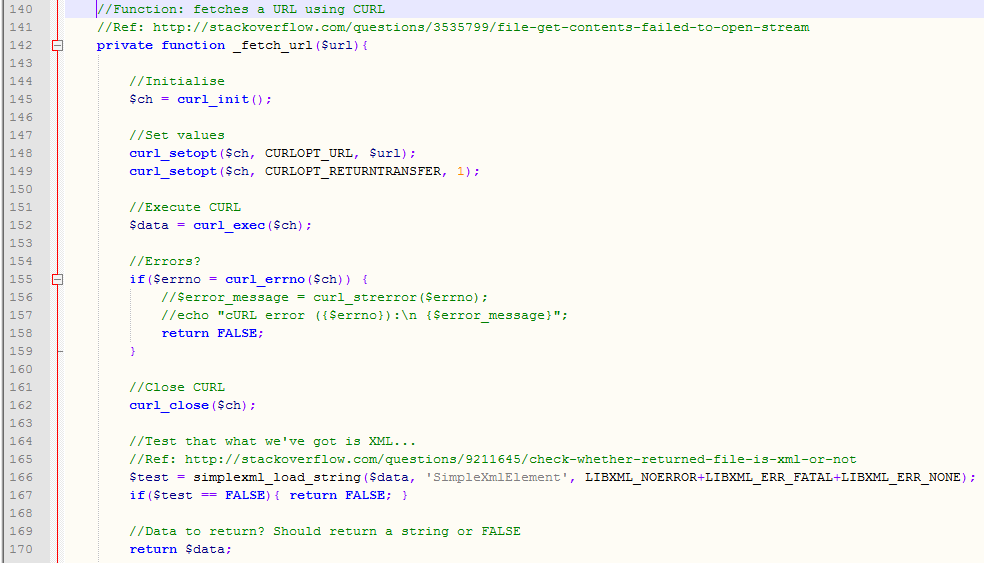

The sheer amount of data was causing my script to take about one minute and thirty seconds to run, due to the number of web calls it was making. Some investigation into optimising the calls suggested I should replace PHP’s file_get_contents() function with CURL if it’s installed on my server. After a quick test to determine I could use CURL, I wrote a small function to get the feeds using CURL and ran the script to see what the difference was.
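For reference, a small fetch helper along those lines might look like the sketch below. It is an illustration, not the function from the repository: the name `fetch_feed_body()` and the ten-second timeout are assumptions, and it falls back to file_get_contents() when the cURL extension is not loaded.

```php
<?php
/**
 * Fetch a URL and return the response body, or null on failure.
 * Uses the cURL extension when it is loaded, otherwise file_get_contents().
 * The timeout value is an arbitrary choice for this sketch.
 */
function fetch_feed_body(string $url, int $timeout = 10): ?string
{
    if (function_exists('curl_init')) {
        $ch = curl_init();
        curl_setopt_array($ch, [
            CURLOPT_URL            => $url,
            CURLOPT_RETURNTRANSFER => true,  // return the body instead of printing it
            CURLOPT_FOLLOWLOCATION => true,  // follow redirects
            CURLOPT_CONNECTTIMEOUT => $timeout,
            CURLOPT_TIMEOUT        => $timeout,
        ]);
        $body = curl_exec($ch); // false on failure
    } else {
        $context = stream_context_create(['http' => ['timeout' => $timeout]]);
        $body    = @file_get_contents($url, false, $context); // false on failure
    }
    return is_string($body) ? $body : null;
}
```

A helper like this still makes one request after another, so the total run time remains roughly the sum of all the round trips whichever function does the fetching.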

I will be honest, there was no noticeable difference in speed when using CURL. So, without learning a leaner machine language to process the feeds with[4], what could I do?

Utilising the Power of Cron

Rather than try and pull all the feed items live from the function, why not just dump out the RSS to a file and present that file’s address to my feed reader? The feed reader would query the static file and, as long as the contents were regularly updated, it should pick up new items.

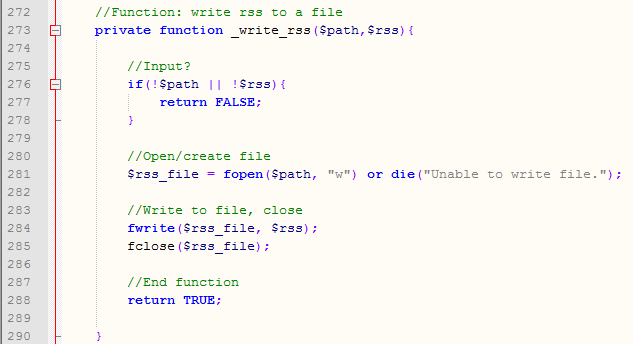

A quick Google search revealed that I could load the contents of a CodeIgniter view into a variable. I would then dump this out into a file somewhere on my server and let the feed reader do the rest.
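A minimal sketch of the "dump it to a file" step is shown below. In CodeIgniter the rendered string would come from the view loader, which returns the output instead of sending it when the third argument is TRUE. The helper name `write_feed_file()`, the temp-file-then-rename step and the paths are my own assumptions for the example, not necessarily what the real script does.

```php
<?php
/**
 * Write a rendered feed to disk by way of a temporary file, so a reader
 * polling the path never picks up a half-written document.
 * (The temp-file-then-rename step is an assumption of this sketch.)
 */
function write_feed_file(string $rss, string $path): bool
{
    $tmp = $path . '.tmp';
    if (file_put_contents($tmp, $rss, LOCK_EX) === false) {
        return false;
    }
    return rename($tmp, $path);
}

// Inside a CodeIgniter controller the string would come from something like:
//   $rss = $this->load->view('rss_template', $data, TRUE);
// where 'rss_template' is a hypothetical view name. A literal stands in here.
$rss  = '<?xml version="1.0" encoding="UTF-8"?>'
      . '<rss version="2.0"><channel><title>Subscriptions</title></channel></rss>';
$path = sys_get_temp_dir() . '/youtube_feed_example.rss';
$ok   = write_feed_file($rss, $path);
```

The feed reader is then pointed at wherever the file ends up on the web server, and the script that builds it can take as long as it likes.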

I then set up a cron job to run the main fetch_feed() controller function once every six hours to refresh the contents of my RSS file, which is fairly simple as CodeIgniter has rudimentary CLI functionality[5].

As an aside, for some bizarre reason my hosting provider’s cron panel just didn’t like the supposed CodeIgniter CLI invocation format at all. A line like this…

0 */6 * * * php -q [FILE PATH]/index.php youtube fetch_feed [KEY] >/dev/null 2>&1

…that should have worked fine just returned “content type: text/html” and refused to output anything else. It ran fine on a Linux box via the terminal and I spent a lot of time commenting out bits and pieces to see if it was my unique setup of CodeIgniter integrated with WordPress causing the problem. In the end, I found this excellent article and set up a separate cli.php file which worked just fine, invoked with the following cron:

0 */6 * * * php -q [FILE PATH]/cli.php "youtube/fetch_feed/[KEY]" >/dev/null 2>&1

If you can get away with using CodeIgniter’s “built-in” CLI interface, then bully for you!

The Static File Problem

To recap, I now have a static .rss file that updates every six hours with new videos from the last two days. Yet, there’s a problem. Beyond the initial import, the Old Reader refuses to register any new videos. It is particularly frustrating since at this point I am regularly checking the .rss file and it definitely has new videos showing in it.

After some research, it appears that my previous assumption[6] that feed readers would just scan for RSS nodes with a recent date was incorrect. On reflection, it’s a little bit obvious: RSS feed aggregators could scan feeds for certain dates based on the last update date, but that’s a lot of processing to fetch a feed and check the videos, only to find there are no new ones.

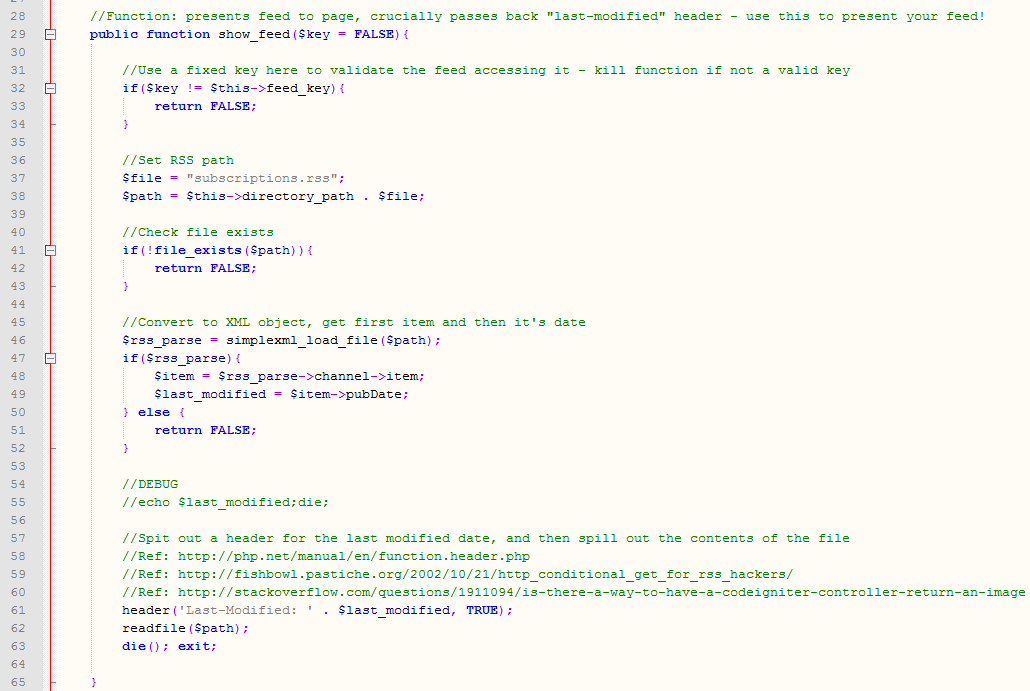

No, instead a lot of services will apparently send an HTTP[7] request first and only pull the content again if the response headers say that the page has been updated since their last visit.

I’ll admit it now, my understanding of headers is fuzzy at best. I know it is invisible information passed to a server before a web page request, normally replying in the form of a response code, and that it can contain a lot of information pertaining to how a page should behave; beyond that, it is currently a bit of a mystery to me. I have previously set headers for a page when I wanted certain files to be prompted as a “download now” (see the HTML bookmark sorter article for an example), when redirecting as PHP can send a redirect condition to go to a new page, or even something as simple as a gesture towards your favourite recently-deceased author by placing his name in the headers of your website via .htaccess[8].

I wrote a new function called show_feed() as part of the class, which returns the contents of the RSS file I generated and prefixes it with a header providing the date of the latest video in the feed. Then I just output the contents of the RSS feed. It was a bit trial and error and I had to use a few online resources to fathom it out, which I have referenced in the final script.
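To make the idea concrete, here is a hedged sketch of how a show_feed()-style function could decide what to send. The Last-Modified header built from the newest video's timestamp follows the description above. The handling of If-Modified-Since and the 304 response is an assumption on my part about how a polling reader might be answered, and `build_feed_response()` is an invented name.

```php
<?php
/**
 * Decide how to answer a request for the static feed.
 * Returns [HTTP status code, headers, body].
 *
 * $latestVideoTime  Unix timestamp of the newest video in the feed
 * $ifModifiedSince  value of the request's If-Modified-Since header, if any
 */
function build_feed_response(string $rss, int $latestVideoTime, ?string $ifModifiedSince): array
{
    $headers = [
        'Content-Type'  => 'application/rss+xml; charset=UTF-8',
        // HTTP dates are always expressed in GMT.
        'Last-Modified' => gmdate('D, d M Y H:i:s', $latestVideoTime) . ' GMT',
    ];

    $since = $ifModifiedSince !== null ? strtotime($ifModifiedSince) : false;
    if ($since !== false && $since >= $latestVideoTime) {
        return [304, $headers, '']; // nothing newer than the reader already has
    }
    return [200, $headers, $rss];
}

// Example: the newest video in the file was published on 3 July 2015 at 09:00 UTC.
$latest   = gmmktime(9, 0, 0, 7, 3, 2015);
$response = build_feed_response('<rss version="2.0"></rss>', $latest, null);
```

In a live controller the status and headers would be sent with http_response_code() and header() before echoing the body, and the If-Modified-Since value would come from `$_SERVER['HTTP_IF_MODIFIED_SINCE']` when the reader supplies it.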

At first my feed reader did not want to update at all. Then I forced a refresh and then, lo and behold, on the following day I found the following in my feed reader…

Potential Improvements and the Code Base

There are a few benefits to my feed script:

- The YouTube subscriptions feed used to have a massive image and no author in the title, so it looked ugly as hell. Every video in my feed script prefixes the video and description with the channel name for quick reference, and the image is now thumbnail-sized on the right.

- At some point towards the end of the YouTube subscription feed’s lifespan there were rumours of favouritism in which videos were shown, i.e. if you tended to click on videos from certain channels, the feed would then favour those channels over others[9]. I suspect this was to lower the amount of unnecessary processing, as developing this script has taught me that collecting and collating information from so many web resources is quite an intensive process – I can only imagine what the resource usage was for the Google team in providing RSS subscription feeds for 1 billion+ people[10]. My feed script has no favouritism at all, it just collects videos: if you get fed up of a channel, just remove it from the .OPML file!

However, there are also quite a few flaws in this script:

- The script only checks two videos per feed[11] to keep the processing time down – if a channel uploads five videos in a short amount of time you’ll probably miss a few of them! There’s a variable called “vid_limit” that can be adjusted to increase this amount, but be aware that it will increase processing time.

- If you have a lot of subscriptions the script will take a while to process them. Most of the bottleneck happens around all the web calls it makes. I can certainly understand why Google were keen to stop offering this sort of feed service if it was half as intense on their servers!

- There’s a lot of hard coding here and reliance on a static file being in a certain location – the script could certainly be adapted to utilise a database to store the feed locations and then have some sort of interface for adding YT channels to it.

I would love to be able to offer this YouTube subscription feed service on my website (like the bookmark sorter) but it might prove costly in terms of bandwidth. Instead, I have placed all the code on GitHub into a repository. Please help yourself to it if it is of any value to you. It’s been designed to run in CodeIgniter but I imagine it would be quite simple to convert it to another MVC framework or just as a series of procedural static functions.

Get the code for the YouTube Subscription Amalgamator here.

If you have any suggestions on how to improve my script, please let me know with a comment below (you can log in using most popular social media accounts) or get in touch on GitHub (in which case please bear with me while I learn the process – I have used GitHub for versioning but not so much for collaboration!).

Postscript (16/07/2015)

This doesn’t have anything to do with the subscription feed, but I updated my CodeIgniter framework to 3.0 this week and, lo and behold, the script stopped running via the command line. My “hacked” cli.php file didn’t work, and using other methods proved fruitless. It seems that a few people have the same problem with using CI via CLI on my hosting provider.

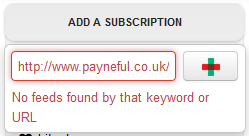

Luckily, I found a forum post where a clever sod named “ZoomIt” suggested using Wget instead. I’m just trying to load the controller function fetch_feed(), after all! I added a modifier to suppress the output (dump it out to the black hole of /dev/null) and hey, presto! One working feed generator, and this cron issue didn’t take a week to resolve like last time. The resulting cronjob looked like the following:

wget -qO- http://www.payneful.co.uk/youtube/fetch_feed/[KEY] &> /dev/null

It’s not as neat as invoking the PHP on the command line and a few forum posts recommended CURL instead as Wget is more for copying file output, but Wget works so I’m happy again.

[1] Google appears to have some animosity towards RSS and feeds in general, given that they went and scrapped Google Reader with little regard for the many people who used it.

[2] The poor fools, why fruitlessly visit websites that may not have updated when you can get the updates to come to you?

[3] I’m sure someone might suggest that I actually mean “URI” but it’s an area that seems needlessly complicated and, at the end of the day, it’s a web address.

[4] I understand that proper programming languages like C or Java could blitz what I’m trying to do in no time, as PHP is notoriously cumbersome when it comes to intense collation and collection.

[5] Command Line Interface refers to running scripts or programs via the computer’s native parser e.g. the cmd tool in Windows or the terminal in Linux.

[6] Well, to assume does make an ass out of u and me. I didn’t do any prior research so I definitely assumed, not presumed (don’t worry, I looked it up too).

[7] HyperText Transfer Protocol. See, all those years at university paid off! I didn’t even have to look that one up!

[8] .htaccess is just an extra layer on “how things should behave on your server”, and is crucial for redirecting, among other things.

[9] I agree with the idea that there was favouritism, but I posit that it was the data aggregation that was costly to Google rather than wanting to provide “relevant content”. I genuinely think it was more a case that they were spending on intensive resources they did not want to provide, as only 10% of people were using the sub feeds directly.

[10] I suspect my script is very similar to how the YouTube “subscriptions” page now works, as I have now had several instances where I click “load more videos” only to see duplicates of videos already being shown on the page, which would only occur if you’re trying to pull from multiple feeds. The original subscription page, in all likelihood, was pulling from the original YouTube subscription video feeds.

[11] I have now updated this in my personal feed to three videos per feed, but the choice is yours if you use my script.

Post by Sean Patrick Payne | July 3, 2015 at 11:00 am | Articles, Portfolio and Work, Projects, Technology

Tags: Google, PHP, Please don't hate me for my horrible code, RSS, XML, YouTube